THIS IS A SHARED ONLINE JOURNAL.

AUTHOR: MR. FELIX ATI-JOHN

ACADEMIC WRITING

SUBJECT - CONSERVATORSHIP LAWS IN ELDERLY OR OLDER PEOPLE HELP AND ADULT PSYCHIATRY

Standard of living

From Wikipedia, the free encyclopedia


Measurement

Standard of living is generally measured by standards such as real (i.e. inflation-adjusted) income per person and poverty rate. Other measures such as access to and quality of health care, income growth inequality, and educational standards are also used. Examples are access to certain goods (such as the number of refrigerators per 1000 people), or measures of health such as life expectancy. It is the ease with which people living in a time or place are able to satisfy their needs and/or wants.

The idea of a 'standard' may be contrasted with the quality of life, which takes into account not only the material standard of living, but also other more intangible aspects that make up human life, such as leisure, safety, cultural resources, social life, physical health and environmental quality. More complex means of measuring well-being must be employed to make such judgements, and these are very often political, thus controversial. Even between two nations or societies that have similar material standards of living, quality of life factors may in fact make one of these places more attractive to a given individual or group.

Criticism

However, there can be problems even with just using numerical averages to compare material standards of living, as opposed to, for instance, a Pareto index (a measure of the breadth of income or wealth distribution). Standards of living are perhaps inherently subjective. As an example, countries with a very small, very rich upper class and a very large, very poor lower class may have a high mean level of income, even though the majority of people have a low "standard of living". This mirrors the problem of poverty measurement, which also tends towards the relative. This illustrates how distribution of income can disguise the actual standard of living.

Likewise, Country A, a perfectly socialist country with a planned economy and a very low average per capita income, would receive a higher score for having lower income inequality than Country B, which has higher income inequality, even if the bottom of Country B's population distribution had a higher per capita income than Country A. Real examples of this include former East Germany compared to former West Germany, or Croatia compared to Italy. In each case, the socialist country has a low income discrepancy (and therefore would score high in that regard), but lower per capita incomes than a large majority of the population of its neighboring counterpart.
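The point about averages can be made concrete with a small calculation. The sketch below is only an illustration: the two countries and every income figure in it are invented, and the median is used here simply as one convenient contrast to the mean, not as the measure the article proposes. It shows how a country with a small, very rich upper class can have the higher mean income while most of its people earn far less than people in a more evenly distributed country.

```python
from statistics import mean, median

# Hypothetical annual incomes (arbitrary currency units), invented for illustration only.
# Country X: a very small, very rich upper class and a very large, poor lower class.
country_x = [5_000] * 95 + [1_000_000] * 5
# Country Y: everyone earns the same moderate income.
country_y = [40_000] * 100


def summarize(incomes):
    """Return (mean, median) income for a list of individual incomes."""
    return mean(incomes), median(incomes)


x_mean, x_median = summarize(country_x)
y_mean, y_median = summarize(country_y)

print(f"Country X: mean={x_mean:,.0f}, median={x_median:,.0f}")
print(f"Country Y: mean={y_mean:,.0f}, median={y_median:,.0f}")
```

With these made-up numbers, Country X has the higher mean income but a much lower median, so ranking the two countries by mean income alone would put the country where most people are poorer on top.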

See also

- Cost of living

- Income and fertility

- Total fertility rate

- Gini coefficient

- Human Development Index

- Index of Economic Freedom

- List of countries by Social Progress Index

- Median household income

- Where-to-be-born Index

- Right to an adequate standard of living

- Working hours


Health human resources


Health human resources (“HHR”) — also known as “human resources for health” (“HRH”) or “health workforce” — is defined as “all people engaged in actions whose primary intent is to enhance health”, according to the World Health Organization's World Health Report 2006.[1] Human resources for health are identified as one of the core building blocks of a health system.[2] They include physicians, nurses, advanced practice registered nurses, midwives, dentists, allied health professions, community health workers, social health workers and other health care providers, as well as health management and support personnel – those who may not deliver services directly but are essential to effective health system functioning, including health services managers, medical records and health information technicians, health economists, health supply chain managers, medical secretaries, and others.

The field of health human resources deals with issues such as planning, development, performance, management, retention, information, and research on human resources for the health care sector. In recent years, raising awareness of the critical role of HRH in strengthening health system performance and improving population health outcomes has placed the health workforce high on the global health agenda.[3]


Global situation

The World Health Organization (WHO) estimates a shortage of almost 4.3 million physicians, midwives, nurses and support workers worldwide.[1] The shortage is most severe in 57 of the poorest countries, especially in sub-Saharan Africa. The situation was declared on World Health Day 2006 as a "health workforce crisis" – the result of decades of underinvestment in health worker education, training, wages, working environment and management.

Shortages of skilled health workers are also reported in many specific care areas. For example, there is an estimated shortage of 1.18 million mental health professionals, including 55,000 psychiatrists, 628,000 nurses in mental health settings, and 493,000 psychosocial care providers needed to treat mental disorders in 144 low- and middle-income countries.[4] Shortages of skilled birth attendants in many developing countries remain an important barrier to improving maternal health outcomes. Many countries, both developed and developing, report maldistribution of skilled health workers leading to shortages in rural and underserved areas.

Regular statistical updates on the global HHR situation are collated in the WHO Global Atlas of the Health Workforce.[5] However the evidence base remains fragmented and incomplete, largely related to weaknesses in the underlying human resource information systems (HRIS) within countries.[6]

In order to learn from best practices in addressing health workforce challenges and strengthening the evidence base, an increasing number of HHR practitioners from around the world are focusing on issues such as HHR advocacy, surveillance and collaborative practice. Some examples of global HRH partnerships include:

Health workforce research

Health workforce research is the investigation of how social, economic, organizational, political and policy factors affect access to health care professionals, and how the organization and composition of the workforce itself can affect health care delivery, quality, equity, and costs.

Many government health departments, academic institutions and related agencies have established research programs to identify and quantify the scope and nature of HHR problems, with the aim of informing health policy for building an innovative and sustainable health services workforce in their jurisdiction. Some examples of HRH information and research dissemination programs include:

- Human Resources for Health journal

- HRH Knowledge Hub, University of New South Wales, Australia

- Center for Health Workforce Studies, University of Albany, New York

- Canadian Institute for Health Information: Spending and Health Workforce

- Public Health Foundation of India: Human Resources for Health in India

- National Human Resources for Health Observatory of Sudan

- OECD Human Resources for Health Care Study

Health workforce policy and planning

In some countries and jurisdictions, health workforce planning is distributed among labour market participants. In others, there is an explicit policy or strategy adopted by governments and systems to plan for adequate numbers, distribution and quality of health workers to meet health care goals. For one, the International Council of Nurses reports:[7]

"The objective of HHRP [health human resources planning] is to provide the right number of health care workers with the right knowledge, skills, attitudes and qualifications, performing the right tasks in the right place at the right time to achieve the right predetermined health targets."

An essential component of planned HRH targets is supply and demand modeling, or the use of appropriate data to link population health needs and/or health care delivery targets with human resources supply, distribution and productivity. The results are intended to be used to generate evidence-based policies to guide workforce sustainability.[8][9] In resource-limited countries, HRH planning approaches are often driven by the needs of targeted programmes or projects, for example those responding to the Millennium Development Goals.[10]

The WHO Workload Indicators of Staffing Need (WISN) is an HRH planning and management tool that can be adapted to local circumstances.[11] It provides health managers a systematic way to make staffing decisions in order to better manage their human resources, based on a health worker’s workload, with activity (time) standards applied for each workload component at a given health facility.
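The idea of deriving staffing needs from workload and activity (time) standards can be sketched roughly as follows. This is a simplified illustration of workload-based staffing arithmetic, not the official WISN procedure: the function name, the activity names and all figures are hypothetical, and any further adjustments described in the WISN user's manual are left out.

```python
def required_staff(annual_workloads, unit_times_hours, available_hours_per_year):
    """Estimate full-time staff needed from workload and activity (time) standards.

    annual_workloads: dict of activity name -> times the activity is performed per year
    unit_times_hours: dict of activity name -> hours one worker needs per unit of activity
    available_hours_per_year: working hours one health worker has available per year
    """
    total_hours = sum(
        count * unit_times_hours[activity]
        for activity, count in annual_workloads.items()
    )
    return total_hours / available_hours_per_year


# Hypothetical facility data, invented for illustration only.
workloads = {"outpatient_visit": 12_000, "delivery": 600}
unit_times = {"outpatient_visit": 0.25, "delivery": 4.0}
available_hours = 1_600

needed = required_staff(workloads, unit_times, available_hours)
current_staff = 3

print(f"Estimated staff required: {needed:.2f}")
print(f"Ratio of current to required staff: {current_staff / needed:.2f}")
```

A ratio below 1 would suggest that the facility has fewer staff than its workload calls for under these assumed standards; a ratio above 1 would suggest the opposite.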

Global Code of Practice on the International Recruitment of Health Personnel

The main international policy framework for addressing shortages and maldistribution of health professionals is the Global Code of Practice on the International Recruitment of Health Personnel, adopted by the WHO's 63rd World Health Assembly in 2010.[12] The Code was developed in a context of increasing debate on international health worker recruitment, especially in some higher income countries, and its impact on the ability of many developing countries to deliver primary health care services. Although non-binding on Member States and recruitment agencies, the Code promotes principles and practices for the ethical international recruitment of health personnel. It also advocates the strengthening of health personnel information systems to support effective health workforce policies and planning in countries.

See also

- Health systems

- Health care providers

- Human resources for health information systems

- Interprofessional education and collaborative practice in health care

- Physician shortage / Nursing shortage

- Human Resources for Health, open access journal

- Canada’s Health Care Providers, 2007, published by the Canadian Institute for Health Information

- NHS National Workforce Projects, part of the English National Health Service

References

- World Health Organization. The world health report 2006: working together for health, Geneva, 2006 – http://www.who.int/whr/2006

- World Health Organization. Health Systems Topics http://www.who.int/healthsystems/topics/en/index.html

- Grepin K, Savedoff WD. "10 Best Resources on ... health workers in developing countries." Health Policy and Planning, 2009; 24(6):479–482 http://heapol.oxfordjournals.org/cgi/content/full/24/6/479

- Scheffler RM et al. Human resources for mental health: workforce shortages in low- and middle-income countries. Geneva, World Health Organization, 2011 – available on http://whqlibdoc.who.int/publications/2011/9789241501019_eng.pdf

- World Health Organization. Global Atlas of the Health Workforce [online database] – available on http://www.who.int/globalatlas/autologin/hrh_login.asp

- Dal Poz MR et al. (eds). Handbook on monitoring and evaluation of human resources for health, Geneva, World Health Organization, 2009 – available on http://www.who.int/hrh/resources/handbook/en/index.html

- International Council of Nurses. Health human resources planning. Geneva, 2008 – http://www.ichrn.com/publications/factsheets/Health-Human-Resources-Plan_EN.pdf

- Dal Poz MR et al. Models and tools for health workforce planning and projections. Geneva, World Health Organization, 2010 – http://whqlibdoc.who.int/publications/2010/9789241599016_eng.pdf

- Health Canada. Health Human Resource Strategy (HHRS). Accessed 12 April 2011.

- Dreesch N et al. "An approach to estimating human resource requirements to achieve the Millennium Development Goals." Health Policy and Planning, 2005; 20(5):267–276.

- World Health Organization. Workload Indicators of Staffing Need (WISN): User's manual. Geneva, 2010 – http://www.who.int/hrh/resources/wisn_user_manual/en/

- International recruitment of health personnel: global code of practice. Resolution adopted by the Sixty-third World Health Assembly, Geneva, May 2010 – available on http://apps.who.int/gb/e/e_wha63.html

External links

- World Health Organization programme of work on health human resources

- Human Resources for Health Databases, Canadian Institute for Health Information

- Human resources for health in developing countries – a dossier from the Institute for Development Studies

- Compendium of tools and guidelines for HRH situation analysis, planning, policies and management systems

- Online community of practice for HRH practitioners on strengthening health workforce information systems

- Human Resources for Health Global Resource Center: largest online collection of HRH research and materials, supported by the IntraHealth International-led CapacityPlus project

- HRIS strengthening implementation toolkit

- Africa Health Workforce Observatory

- CapacityPlus: the USAID-funded global project uniquely focused on the health workforce needed to achieve the Millennium Development Goals.

MEDICAL RESTRAINT

MEDICAL RESTRAINTS ARE GENERALLY USED TO PREVENT PEOPLE WITH SEVERE PHYSICAL OR MENTAL DISORDERS FROM HARMING THEMSELVES OR OTHERS. A MAJOR GOAL OF MEDICAL RESTRAINTS IS TO PREVENT INJURIES DUE TO FALLS. OTHER MEDICAL RESTRAINTS ARE INTENDED TO PREVENT A HARMFUL BEHAVIOUR SUCH AS HITTING OTHERS (EXCERPT FROM THE WIKIPEDIA LIBRARY).

CONSTRUCT VALIDITY AND CONTENT VALIDITY OF THE MEASURE OF MEDICAL RESTRAINTS

Medical restraint


Medical restraints are physical restraints used during certain medical procedures. Medical restraints are designed to restrain patients with the minimum of discomfort and pain and to prevent patients injuring themselves or others.

There are many kinds of mild, safety-oriented medical restraints which are widely used. For example, the use of bed rails is routine in many hospitals and other care facilities, as the restraint prevents patients from rolling out of bed accidentally. Newborns frequently wear mittens to prevent accidental scratching. Some wheelchair users use a belt or a tray to keep them from falling out of their wheelchairs. In fact, not using these kinds of restraints when needed can lead to legal liability for preventable injuries.[1]

Medical restraints are generally used to prevent people with severe physical or mental disorders from harming themselves or others. A major goal of most medical restraints is to prevent injuries due to falls. Other medical restraints are intended to prevent a harmful behavior, such as hitting people.

Ethically and legally, once a person is restrained, responsibility for the safety and well-being of the restrained person falls upon the restrainer, to a degree appropriate to the type and severity of the restraining method. For example, a person who is placed in a secured room should be checked at regular intervals for indications of distress. At the other extreme, a person who is rendered semi-conscious by pharmacological (or chemical) sedation should be constantly monitored by a well-trained individual who is dedicated to protecting the restrained person's physical and medical safety. Failure to properly monitor a restrained individual may result in criminal and civil prosecution, depending on jurisdiction.

Although medical restraints, used properly, can help prevent injury, they can also be dangerous. The United States Food and Drug Administration (FDA) estimated in 1992 that at least 100 deaths occur annually in the U.S. from their improper use in nursing homes, hospitals and private homes. Most of the deaths are due to strangulation. The agency has also received reports of broken bones, burns and other injuries related to improper use of restraints.

Because of the potential for abuse, the use of medical restraints is regulated in many jurisdictions. At one time in California, psychiatric restraint was viewed as a treatment. However, with the passing of SB-130, which became law in 2004, the use of psychiatric restraint(s) is no longer viewed as a treatment, but can be used as a behavioral intervention when an individual is in imminent danger of serious harm to self or others.[2]

Adverse effects of physical restraints: Over roughly the last decade, a growing body of evidence and literature has supported the idea of a restraint-free environment, because restraints can have contradictory and dangerous effects.[3] The adverse outcomes associated with restraint use include falls and injuries, incontinence, circulation impairment, agitation, social isolation, and even death.[4]


Types of medical restraints

There are many types of medical restraint:

- Four-point restraints, fabric body holders and straitjackets are typically only used temporarily during psychiatric emergencies.

- Restraint masks to prevent patients from biting in retaliation to medical authority in situations where a patient is known to be violent.

- Lap and wheelchair belts, or trays that clip across the front of a wheelchair so that the user can't fall out easily, may be used regularly by patients with neurological disorders which affect balance and movement.

- All four side rails being in the upright position on a bed can be considered a restraint.

- Safety vests and jackets can be placed on a patient like any other vest garment. They typically have a long strap at each end that can be tied behind a chair or to the sides of a bed to prevent injury or to settle patients for satisfying basic needs such as eating and sleeping. Posey vests are commonly used with elderly patients who are at risk of serious injury from falling.

- Limb restraints to prevent unwanted activity in various limbs. They are wrapped around the wrists or ankles, and tied to the side of a bed, to prevent self-harm, harm to medical staff, or as punishment for physical resistance to medical authority.

- Mittens to prevent scratching are common for newborns, but may also be used on psychiatric patients or patients who manage to use their hands to undo limb restraints.

- A Papoose board can be used for babies and young children.

- Chemical restraints are drugs that are administered to restrict the freedom of movement or to sedate a patient.

- CCG (Crisis Consultant Group) Non-Violent Physical technique.

- The Mandt System.

- NAPPI (Non Abusive Psychological and Physical Intervention).

- NVCI (Non-Violent Crisis Intervention) techniques.

- PCM (Professional Crisis Management) from the Professional Crisis Management Association (www.pcma.com)

- ProACT (Professional Assault Crisis Training).

- TCI (Therapeutic Crisis Intervention).

Laws pertaining to medical restraints

Current United States law requires that most involuntary medical restraints be used only when ordered by a physician. Such a physician's order, which is subject to renewal upon expiration if necessary, is valid for a maximum of 24 hours.[5]

References

- http://www.nursinglaw.com/syhp.htm

- disabilityrightsca.org/OPR/PRAT2004/Restraint&Seclusion.ppt

- Evans D, Wood J, Lambert L (Feb 2003). "Patient injury and physical restraint devices: a systematic review". Journal of Advanced Nursing 41 (3): 274–82. doi:10.1046/j.1365-2648.2003.02501.x. PMID 12581115.

- Luo H, Lin M, Castle N (Feb 2011). "Physical restraint use and falls in nursing homes: a comparison between residents with and without dementia". American Journal of Alzheimer's Disease & Other Dementias 26 (1): 44–50. doi:10.1177/1533317510387585. PMID 21282277.

- http://www.emedicine.com/emerg/topic776.htm

External links

- Cruzan, Susan (1992-06-16). "Patient Restraint Devices Can Be Dangerous". News Release. Food and Drug Administration. Archived from the original on 2008-01-29. Retrieved 2009-08-15.

- emedicine.com article: Restraints, by Herbert Wigder, MD and Mary S Matthews, RN, BSN, JD


Translational research


Definitions of Translational Research

Translational research (also referred to as translational science) is defined by the European Society for Translational Medicine (EUSTM) as an interdisciplinary branch of the biomedical field supported by three main pillars: benchside, bedside and community.[1] The goal of translational medicine (TM) is to combine disciplines, resources, expertise, and techniques within these pillars to promote enhancements in prevention, diagnosis, and therapies. Accordingly, TM is a highly interdisciplinary field, the primary goal of which is to coalesce assets of various natures within the individual pillars in order to improve the global healthcare system significantly.[2]

Translational research applies findings from basic science to enhance human health and well-being. In a medical research context, it aims to "translate" findings in fundamental research into medical and nursing practice and meaningful health outcomes. Translational research implements a "bench-to-bedside" model, running from laboratory experiments through clinical trials to point-of-care patient applications,[3] and harnesses knowledge from basic sciences to produce new drugs, devices, and treatment options for patients. The end point of translational research is the production of a promising new treatment that has practical applications and can then be used clinically or commercialized.[4]

As a relatively new research discipline, translational research incorporates aspects of both basic science and clinical research, requiring skills and resources that are not readily available in a basic laboratory or clinical setting. It is for these reasons that translational research is more effective in dedicated university science departments or isolated, dedicated research centres.[5] Since 2009, the field has had specialized journals, the American Journal of Translational Research and Translational Research, dedicated to translational research and its findings.

Translational research is broken down into five levels, T1 through T5. T1 research refers to the "bench-to-bedside" enterprise of translating knowledge from the basic sciences into the development of new treatments, and T2 research refers to translating the findings from clinical trials into everyday practice.[4]

Translational research includes two areas of translation. One is the process of applying discoveries generated during research in the laboratory, and in preclinical studies, to the development of trials and studies in humans. The second area of translation concerns research aimed at enhancing the adoption of best practices in the community. Cost-effectiveness of prevention and treatment strategies is also an important part of translational science.[4]

Comparison to basic research or applied research

Basic research is the systematic study directed toward greater knowledge or understanding of the fundamental aspects of phenomena and is performed without thought of practical ends. It results in general knowledge and understanding of nature and its laws.[6]

Applied research is a form of systematic inquiry involving the practical application of science. It accesses and uses some part of the research communities' (the academia's) accumulated theories, knowledge, methods, and techniques, for a specific, often state, business, or client-driven purpose.[7]

In medicine, translational research is increasingly a separate research field. A citation pattern between the applied and basic sides in cancer research appeared around 2000.[8]

Challenges and criticisms of translational research

Critics of translational research point to examples of important drugs that arose from fortuitous discoveries in the course of basic research, such as penicillin and benzodiazepines,[9] and to the importance of basic research in improving our understanding of basic biological facts (e.g. the function and structure of DNA) that go on to transform applied medical research.[10]

Examples of failed translational research in the pharmaceutical industry include the failure of anti-aβ therapeutics in Alzheimer's disease.[11] Other problems have stemmed from the widespread irreproducibility thought to exist in translational research literature.[12]

Translational research facilities

Although translational research is relatively new, it is being recognised and embraced globally. There are currently committed translational research institutes or research departments at the following locations:

- The Center for Clinical and Translational Research at Virginia Commonwealth University, Richmond, Virginia, United States

- Translational Research Institute (Australia), Brisbane, Queensland, Australia.

- Translational Genomics Research Institute, Phoenix, Arizona, United States.

- Maine Medical Center in Portland, Maine, United States has a dedicated translational research institute.[13]

- Scripps Research Institute, Florida, United States, has a dedicated translational research institute.[14]

See also

- American Journal of Translational Research

- Personalized medicine

- Biomedical research

- Biological engineering

- Systems biology

- Translational Research Informatics

- Clinical trials

- Clinical and Translational Science

External links

- Translational Research Institute

- NIH Roadmap

- CTSA Awards

- Center for Translational Injury Research

- American Journal of Translational Research

- Center for Comparative Medicine and Translational Research

- Translational Research Institute for Metabolism and Diabetes

- OSCAT2012 : Conference on translational medicine

References[edit]

- Cohrs, Randall J.; Martin, Tyler; Ghahramani, Parviz; Bidaut, Luc; Higgins, Paul J.; Shahzad, Aamir. "Translational Medicine definition by the European Society for Translational Medicine". New Horizons in Translational Medicine 2 (3): 86–88. doi:10.1016/j.nhtm.2014.12.002.

- Cohrs, Randall J.; Martin, Tyler; Ghahramani, Parviz; Bidaut, Luc; Higgins, Paul J.; Shahzad, Aamir. "Translational Medicine definition by the European Society for Translational Medicine". New Horizons in Translational Medicine 2 (3): 86–88. doi:10.1016/j.nhtm.2014.12.002.

- "What is Translational Science". http://tuftsctsi.org/. Tufts Clinical and Translational Science Institute. Retrieved 9 June 2015.

- "The Meaning of Translational Research and Why It Matters". http://jama.jamanetwork.com/. Woolf, S.H., The Journal of the American Medical Association, JAMA 2008;299;211-213. Retrieved 3 June 2015.

- "Obstacles facing translational research in academic medical centers". http://www.fasebj.org/. Obstacles facing translational research in academic medical centres. Retrieved 12 June 2015.

- "What is basic research?" (PDF). National Science Foundation. Retrieved 2014-05-31.

- Roll-Hansen, Nils (April 2009). Why the distinction between basic (theoretical) and applied (practical) research is important in the politics of science (PDF) (Report). The London School of Economics and Political Science. Retrieved November 30, 2013.

- Cambrosio, Alberto; Keating, Peter; Mercier, Simon (December 2006), "Mapping the emergence and development of translational cancer research", European Journal of Cancer (Elsevier Ltd) 42 (28): 3140–3148, doi:10.1016/j.ejca.2006.07.020

- Tone, Andrea (2009). The Age of Anxiety: the History of America's Love Affairs with Tranquilizers.

- http://blogs.scientificamerican.com/the-curious-wavefunction/2012/11/26/the-perils-of-translational-research/

- Koo, Edward. "Anti-aβ therapeutics in Alzheimer's disease: the need for a paradigm shift.". Cell Press. Retrieved 28 April 2014.

- Prinz, Florian. "Believe it or not: how much can we rely on published data on potential drug targets?". Nature Publishing Group. Retrieved 28 April 2014.

- "Maine Medical Center Research Institute attracts top scientists, licenses discoveries". http://www.mainebiz.biz/. Mainebiz. Retrieved 17 June 2015.

- "Translational Research Institute". https://www.scripps.edu. The Scripps Research Institute. Retrieved 17 June 2015.

- "Translational Research - PhD and Graduate Certificate". http://www.med.monash.edu.au/. Monash University. Retrieved 17 June 2015.

- "MPhil in Translational Research". http://www.di.uq.edu.au/ /. University of Queensland Diamantina Institute. Retrieved 17 June 2015.

- "Clinical and Translational Research". http://medschool.duke.edu/. Duke University. Retrieved 17 June 2015.

- "Center for Clinical and Translational Science". http://medschool.creighton.edu/. Creighton University. Retrieved 14 July 2015.

Social behavior

From Wikipedia, the free encyclopedia

Social behavior is behavior among two or more organisms, typically from the same species. Social behavior is exhibited by a wide range of organisms including social bacteria, slime moulds, social insects, social shrimp, naked mole-rats, and humans.[1]


In sociology[edit]

Sociology is the scientific or academic study of social behavior, including its origins, development, organization, and institutions.[2]

Research has shown that different animals, including humans, share similar types of social behaviour, such as aggression and bonding. This also holds for species with a primitive brain, such as ants. Although humans share some aspects of social behaviour with such species, human social behaviour is much more complicated.[3]

In psychology[edit]

Social psychology is the scientific study of how people's thoughts, feelings, and behaviors are influenced by the actual, imagined, or implied presence of others.[4] In psychology, social behaviour refers to human behaviour. It covers behaviours, ranging from the physical to the emotional, through which we communicate, and also the ways in which we are influenced by ethics, attitudes, genetics and culture.[5]

Types[edit]

The types of social behaviour include the following:

Aggressive behaviour[edit]

Violent behaviour and bullying are two types of aggressive behaviour, and their outcomes are very similar. These outcomes include affiliation, gaining attention, power and control.[6] Aggressive behaviour is a type of social behaviour that can cause or threaten physical or emotional harm. People who behave aggressively are likely to be irritable, impulsive and restless, and the behaviour can range from verbal abuse to damaging a victim's property. An occasional outburst of aggression is common; aggressive behaviour, on the other hand, is always deliberate and occurs either habitually or in a pattern. One way to handle aggressive behaviour is to understand its cause. The following can influence aggressive behaviour:

- Family structure

- Relationships

- Work or school environment

- Health conditions

- Psychiatric issues

- Life issues

In children[edit]

Poor parenting skills are one of the most common reasons why children are aggressive. Biological factors and a lack of relationship skills are others. As children grow up, they often imitate behaviour such as violence or aggression that they see in their elders. A child may also behave aggressively because it earns attention from parents, teachers or peers. Parents who are not aware that such behaviour is occurring may unknowingly reward it and so encourage the child. Aggressive behaviour in children can also be associated with conditions such as bipolar disorder.[7]

In adults[edit]

Adults can also behave aggressively; this can develop over time from undesirable life experiences or an illness. People with disorders such as depression, anxiety or post-traumatic stress disorder may show aggressive behaviour, but do so unintentionally. For those without an underlying medical or emotional disorder, frustration is often the cause of aggressive behaviour. Aggression can also arise when someone stops caring about others.[7]

Violent behaviour[edit]

Violent behaviour is behaviour in which an individual threatens or physically harms another individual. It usually starts off with verbal abuse and then escalates to physical harm such as hitting or hurting.

In children[edit]

Violence, like aggressive behaviour, is learned: children imitate what they see in their elders, so it is important to help children understand that violence is not the best way to resolve conflict. Adults can set a good example by resolving conflict calmly. Violence such as spanking, pinching, shoving or choking should never be used to discipline a child.

Many factors can trigger violent behaviour, including the following:

- Childhood abuse

- History of violent behaviour

- Use of drugs such as cocaine

- History of arrests

- Mental health problems, such as bipolar disorder

- Presence of firearms in the household

- Genetic factors

- Brain damage from accident

- Exposure to violence in media

- Socioeconomic factors such as poverty, single parenting, marital breakups and unemployment.[8]

Behavioural and developmental disorders[edit]

Main article: Emotional and behavioral disorders

- Expressive language disorder, a condition in which a child or adult has difficulty expressing themselves in speech.

- Seizure disorder, a neurological disorder that can cause spasms, minor physical signs or a combination of symptoms that cannot be controlled.

- Down syndrome, a condition caused by a chromosomal abnormality, whose effects change over the course of development.

- Attention deficit disorder, a common neurobehavioural disorder characterised by over-activity, impulsivity, inattentiveness or a combination of these.

- Bipolar disorder, a mood disorder characterised by swings in mood, which can sometimes change rapidly.

- Autism spectrum disorder, a condition that affects social interaction, interests, behaviour and communication.[10]

- Cerebral palsy, a condition caused by damage to the developing brain, typically before, during or shortly after birth. It affects movement and can be accompanied by abnormal speech, hearing and visual impairments, and intellectual disability.[11]

See also[edit]

References[edit]

- "Social Behavior - Biology Encyclopedia - body, examples, animal, different, life, structure, make, first". www.biologyreference.com. Retrieved 2015-11-02.

- sociology. (n.d.). The American Heritage Science Dictionary. Retrieved 13 July 2013, from Dictionary.com website: http://dictionary.reference.com/browse/sociology

- Genetic Determinants of Self Identity and Social Recognition in Bacteria. Washington: Karine A. Gibbs, Mark L. Urbanowski, E. Peter Greenberg. 2008. pp. 256–9.

- Allport, G. W (1985). "The historical background of social psychology". In Lindzey, G; Aronson, E. The Handbook of Social Psychology. New York: McGraw Hill. p. 5.

- "Psychology Glossary. Psychology definitions in plain English.". www.alleydog.com. Retrieved 2015-11-04.

- Zirpoli, T.J (2008). "Excerpt from Behavior Management: Applications for Teachers,". http://www.education.com/reference/article/aggressive-behavior/: 1.

- "Aggressive Behavior". Healthline. Retrieved 2015-11-03.

- "Understanding Violent Behavior In Children and Adolescents". www.aacap.org. Retrieved 2015-11-03.

- "Violent Behaviour - HealthLinkBC". www.healthlinkbc.ca. Retrieved 2015-11-03.

- Choices, NHS. "Autism spectrum disorder - NHS Choices". www.nhs.uk. Retrieved 2015-11-02.

- "Other Developmental Disorders". www.firstsigns.org. Retrieved 2015-11-02.


Documentation science

From Wikipedia, the free encyclopedia


Paul Otlet (1868–1944) and Henri La Fontaine (1854–1943), both Belgian lawyers and peace activists, established documentation science as a field of study. Otlet, who coined the term documentation science, is the author of two treatises on the subject: Traité de Documentation (1934) and Monde: Essai d'universalisme (1935). He, in particular, is regarded as the progenitor of information science.

In the United States, 1968 was a landmark year in the transition from documentation science to information science: the American Documentation Institute became the American Society for Information Science (later the American Society for Information Science and Technology), and Harold Borko introduced readers of the journal American Documentation to the term in his paper "Information science: What is it?". Information science has not entirely subsumed documentation science, however. Berard (2003, p. 148) writes that the word documentation is still much used in Francophone countries, where it is synonymous with information science. One potential explanation is that these countries made a clear division of labour between libraries and documentation centres, and the personnel employed at each kind of institution have different educational backgrounds. Documentation science professionals are called documentalists.

Developments[edit]

1931: The International Institute for Documentation (Institut International de Documentation, IID) was the new name for the International Institute of Bibliography (originally Institut International de Bibliographie, IIB), established on 12 September 1895 in Brussels.

1937: American Documentation Institute was founded (renamed the American Society for Information Science in 1968).

1948: S. R. Ranganathan "discovers" documentation.[2]

1965-1990: Documentation departments were established in, for example, large research libraries with the appearance of commercial online computer retrieval systems. The persons doing the searches for clients were termed documentalists. With the appearance of first CD-ROM databases and later the internet, these intermediary searches have decreased and most such departments have been closed or merged with other departments. (This is perhaps a European terminology; in the USA the term Information Centers was often used.)

1986: Information service and management started under the name "Bibliotheek en Documentaire Informatieverzorging" as tertiary education in the Netherlands.

1996: "Dokvit", Documentation Studies, was established in 1996 at the University of Tromsø in Norway (see Windfeld Lund, 2007).

2002: The Document Academy,[3] an international network chaired and cosponsored by The Program of Documentation Studies, University of Tromsoe, Norway and The School of Information Management and Systems, UC Berkeley.

2003: Document Research Conference (DOCAM) is a series of conferences organised by the Document Academy. DOCAM '03 (2003) was the first conference in the series. It was held August 13–15, 2003 at The School of Information Management and Systems (SIMS) at the University of California, Berkeley. (See http://thedocumentacademy.org/?q=node/4 ).

2004: The term Library, information and documentation studies (LID) has been suggested as an alternative to Library and information science (LIS), (cf., Rayward et al., 2004)

Document versus information[edit]


Especially since the 1990s there have been strong arguments put forward to revive the concept of document as the basic theoretical construct. Buckland (1991), Hjørland (2000) and others have for years been arguing that the concept of document is the most fruitful one to consider as the core concept in LIS. The concept of document is understood as "any concrete or symbolic indication, preserved or recorded, for reconstructing or for proving a phenomenon, whether physical or mental" (Briet, 1951, 7; here quoted from Buckland, 1991). Recent additions to that view are Frohmann (2004), Furner (2004), Konrad (2007) and Ørom (2007). Frohmann (2004) discusses how the idea of information as the abstract object sought, processed, communicated and synthesized sets the stage for a paradox of the scientific literature by simultaneously supporting and undermining its significance for research front work. Furner (2004) argues that all the problems we need to consider in information studies can be dealt with without any need for a concept of information. All these authors assume that the concept of document is a more precise description of the objects that information science is about.

See also[edit]

- Document

- Documentation Research and Training Centre

- Information science#European documentation

- Institut für Dokumentologie und Editorik

- International Federation for Information and Documentation

- Journal of Documentation

- Library and information science

- Memory institution

- Mundaneum

- Subject (documents)

- Suzanne Briet

- World Congress of Universal Documentation

References[edit]

- Rayward, W. B. (1994). "Visions of Xanadu: Paul Otlet (1868-1944) and hypertext". Journal of the American Society for Information Science 45 (4): 235–250. doi:10.1002/(SICI)1097-4571(199405)45:4<235::AID-ASI2>3.0.CO;2-Y.

- Ranganathan, S.R. (1950). Library tour 1948. Europe and America, impressions and reflections. London: G.Blunt.

- The Document Academy

Further reading[edit]

- Berard, R. (2003). Documentation. IN: International Encyclopedia of Information and Library Science. 2nd. ed. Ed. by John Feather & Paul Sturges. London: Routledge (pp. 147–149).

- Bradford, S. C. (1948). Documentation. London: Crosby Lockwood.

- Bradford, S. C. (1953). Documentation. 2nd ed. London: Crosby Lockwood.

- Briet, Suzanne (1951). Qu'est-ce que la documentation? Paris: Editions Documentaires Industrielle et Techniques.

- Briet, Suzanne, 2006. What is Documentation? English Translation of the Classic French Text. Transl. and ed. by Ronald E. Day and Laurent Martinet. Lanham, MD: Scarecrow Press.

- Buckland, Michael, 1996. Documentation, Information Science, and Library Science in the U.S.A. Information Processing & Management 32, 63-76. Reprinted in Historical Studies in Information Science, eds. Trudi B. Hahn, and Michael Buckland. Medford, NJ: Information Today, 159- 172.

- Buckland, Michael (2007). Northern Light: Fresh Insights into Enduring Concerns. In: Document (re)turn. Contributions from a research field in transition. Ed. By Roswitha Skare, Niels Windfeld Lund & Andreas Vårheim. Frankfurt am Main: Peter Lang. (pp. 315–322). Retrieved 2011-10-16 from: http://people.ischool.berkeley.edu/~buckland/tromso07.pdf

- Farkas-Conn, I. S. (1990). From Documentation to Information Science. The Beginnings and Early Development of the American Documentation Institute - American Society for information Science. Westport, CT: Greenwood Press.

- Frohmann, Bernd, 2004. Deflating Information: From Science Studies to Documentation. Toronto; Buffalo; London: University of Toronto Press.

- Garfield, E. (1953). Librarian versus documentalist. Manuscript submitted to Special Libraries. http://www.garfield.library.upenn.edu/papers/librarianvsdocumentalisty1953.html

- Graziano, E. E. (1968). On a theory of documentation. American Documentalist 19, 85-89.

- Hjørland, Birger (2000). Documents, memory institutions and information science. JOURNAL OF DOCUMENTATION, 56(1), 27-41. Retrieved 2013-02-17 from: http://iva.dk/bh/Core%20Concepts%20in%20LIS/articles%20a-z/Documents_memory%20institutions%20and%20IS.pdf

- Konrad, A. (2007). On inquiry: Human concept formation and construction of meaning through library and information science intermediation (Unpublished doctoral dissertation). University of California, Berkeley. Retrieved from http://escholarship.org/uc/item/1s76b6hp

- W. Boyd Rayward; Hansson,Joacim & Suominen, Vesa (eds). (2004). Aware and Responsible: Papers of the Nordic-International Colloquium on Social and Cultural Awareness and Responsibility in Library, Information and Documentation Studies. Lanham, MD: Scarecrow Press. (pp. 71–91). http://www.db.dk/binaries/social%20and%20cultural%20awareness.pdf

- Simon, E. N. (1947). A novice on "documentation". Journal of Documentation, 3(2), 238-341.

- Williams, R. V. (1998). The Documentation and Special Libraries Movement in the United States, 1910-1960. IN: Hahn, T. B. & Buckland, M. (eds.): Historical Studies in Information Science. Medford, NJ: Information Today, Inc. (pp. 173–180).

- Windfeld Lund, Niels, 2004. Documentation in a Complementary Perspective. In Aware and responsible: Papers of the Nordic-International Colloquium on Social and Cultural Awareness and Responsibility in Library, Information and Documentation Studies (SCARLID), ed. Rayward, Lanham, Md.: Scarecrow Press, 93-102.

- Windfeld Lund, Niels (2007). Building a Discipline, Creating a Profession: An Essay on the Childhood of "Dokvit". IN: Document (re)turn. Contributions from a research field in transition. Ed. By Roswitha Skare, Niels Windfeld Lund & Andreas Vårheim. Frankfurt am Main: Peter Lang. (pp. 11–26). Retrieved 2011-10-16 from: http://www.ub.uit.no/munin/bitstream/handle/10037/966/paper.pdf?sequence=1

- Windfeld Lund, Niels (2009). Document Theory. ANNUAL REVIEW OF INFORMATION SCIENCE AND TECHNOLOGY, 43, 399-432.

- Woledge, G. (1983). Bibliography and Documentation - Words and Ideas. Journal of Documentation, 39(4), 266-279.

- Ørom, Anders (2007). The concept of information versus the concept of document. IN: Document (re)turn. Contributions from a research field in transition. Ed. By Roswitha Skare, Niels Windfeld Lund & Andreas Vårheim. Frankfurt am Main: Peter Lang. (pp. 53–72).

Information retrieval

From Wikipedia, the free encyclopedia


Automated information retrieval systems are used to reduce what has been called "information overload". Many universities and public libraries use IR systems to provide access to books, journals and other documents. Web search engines are the most visible IR applications.

Overview[edit]

An information retrieval process begins when a user enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In information retrieval a query does not uniquely identify a single object in the collection. Instead, several objects may match the query, perhaps with different degrees of relevancy.

An object is an entity that is represented by information in a content collection or database. User queries are matched against the database information. However, as opposed to classical SQL queries of a database, in information retrieval the results returned may or may not match the query, so results are typically ranked. This ranking of results is a key difference of information retrieval searching compared to database searching.[1]

Depending on the application the data objects may be, for example, text documents, images,[2] audio,[3] mind maps[4] or videos. Often the documents themselves are not kept or stored directly in the IR system, but are instead represented in the system by document surrogates or metadata.

Most IR systems compute a numeric score on how well each object in the database matches the query, and rank the objects according to this value. The top ranking objects are then shown to the user. The process may then be iterated if the user wishes to refine the query.[5]
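The score-then-rank loop described above can be illustrated with a minimal Python sketch. The scoring function here (counting how often query terms occur in a document) is a deliberately naive assumption chosen for illustration, not the scoring used by any particular IR system; the names `score`, `rank` and the toy collection `docs` are hypothetical.

```python
from collections import Counter


def score(query: str, document: str) -> int:
    """Toy relevance score: total occurrences of the distinct query terms in the document."""
    doc_terms = Counter(document.lower().split())
    return sum(doc_terms[term] for term in set(query.lower().split()))


def rank(query: str, documents: dict[str, str], top_k: int = 3) -> list[tuple[str, int]]:
    """Score every document, sort by descending score, and keep the top_k results."""
    scored = [(doc_id, score(query, text)) for doc_id, text in documents.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical three-document collection.
docs = {
    "d1": "the cat sat on the mat",
    "d2": "dogs chase the cat",
    "d3": "stock prices fell sharply",
}
ranking = rank("cat mat", docs)
# ranking == [("d1", 2), ("d2", 1), ("d3", 0)]
```

A user who refines the query would simply call `rank` again with the new query string, which mirrors the iteration step mentioned in the text.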

History[edit]

“there is ... a machine called the Univac ... whereby letters and figures are coded as a pattern of magnetic spots on a long steel tape. By this means the text of a document, preceded by its subject code symbol, can be recorded ... the machine ... automatically selects and types out those references which have been coded in any desired way at a rate of 120 words a minute”

— J. E. Holmstrom, 1948


In 1992, the US Department of Defense, along with the National Institute of Standards and Technology (NIST), cosponsored the Text Retrieval Conference (TREC) as part of the TIPSTER text program. The aim was to support the information retrieval community by supplying the infrastructure needed for evaluation of text retrieval methodologies on a very large text collection. This catalyzed research on methods that scale to huge corpora. The introduction of web search engines has boosted the need for very large scale retrieval systems even further.

Model types[edit]

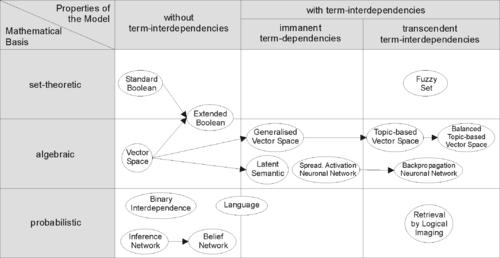

First dimension: mathematical basis[edit]

- Set-theoretic models represent documents as sets of words or phrases. Similarities are usually derived from set-theoretic operations on those sets. Common models are:

- Algebraic models represent documents and queries usually as vectors, matrices, or tuples. The similarity of the query vector and document vector is represented as a scalar value.

- Probabilistic models treat the process of document retrieval as a probabilistic inference. Similarities are computed as probabilities that a document is relevant for a given query. Probabilistic theorems like the Bayes' theorem are often used in these models.

- Binary Independence Model

- Probabilistic relevance model, on which the Okapi (BM25) relevance function is based

- Uncertain inference

- Language models

- Divergence-from-randomness model

- Latent Dirichlet allocation

- Feature-based retrieval models view documents as vectors of values of feature functions (or just features) and seek the best way to combine these features into a single relevance score, typically by learning to rank methods. Feature functions are arbitrary functions of document and query, and as such can easily incorporate almost any other retrieval model as just another feature.
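As an illustration of the algebraic family listed above, the following sketch represents a query and two documents as term-count vectors and compares them with cosine similarity, which yields the scalar similarity value the text mentions. Raw term counts and whitespace tokenisation are simplifying assumptions; the helper names `term_vector` and `cosine_similarity` are hypothetical.

```python
import math
from collections import Counter


def term_vector(text: str) -> Counter:
    """Represent a text as a sparse vector of raw term counts."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse term vectors (0.0 if either is empty)."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


query = term_vector("information retrieval")
doc_a = term_vector("information retrieval systems rank documents")
doc_b = term_vector("cooking pasta at home")

sim_a = cosine_similarity(query, doc_a)  # 2 / sqrt(10), roughly 0.632
sim_b = cosine_similarity(query, doc_b)  # 0.0, no shared terms
```

Treating each distinct term as its own axis is exactly the orthogonality assumption of models without term interdependencies; weighting schemes such as tf-idf would replace the raw counts but leave the similarity computation unchanged.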

Second dimension: properties of the model[edit]

- Models without term-interdependencies treat different terms/words as independent. This fact is usually represented in vector space models by the orthogonality assumption of term vectors or in probabilistic models by an independence assumption for term variables.

- Models with immanent term interdependencies allow a representation of interdependencies between terms. However, the degree of the interdependency between two terms is defined by the model itself. It is usually directly or indirectly derived (e.g. by dimensional reduction) from the co-occurrence of those terms in the whole set of documents.

- Models with transcendent term interdependencies allow a representation of interdependencies between terms, but they do not specify how the interdependency between two terms is defined. They rely on an external source for the degree of interdependency between two terms (for example, a human or sophisticated algorithms).

Performance and correctness measures[edit]

Further information: Category:Information retrieval evaluation

The evaluation of an information retrieval system is the process of assessing how well a system meets the information needs of its users. Traditional evaluation metrics, designed for Boolean retrieval or top-k retrieval, include precision and recall. Many more measures for evaluating the performance of information retrieval systems have also been proposed. In general, measurement considers a collection of documents to be searched and a search query. All common measures described here assume a ground truth notion of relevancy: every document is known to be either relevant or non-relevant to a particular query. In practice, queries may be ill-posed and there may be different shades of relevancy.

Virtually all modern evaluation metrics (e.g., mean average precision, discounted cumulative gain) are designed for ranked retrieval without any explicit rank cutoff, taking into account the relative order of the documents retrieved by the search engines and giving more weight to documents returned at higher ranks.[citation needed]

The mathematical symbols used in the formulas below mean:

- $X \cap Y$ - Intersection - in this case, specifying the documents in both sets X and Y

- $|X|$ - Cardinality - in this case, the number of documents in set X

- $\int$ - Integral

- $\sum$ - Summation

- $\Delta$ - Symmetric difference

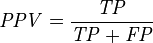

Precision[edit]

Main article: Precision and recall

Precision is the fraction of the documents retrieved that are relevant to the user's information need.

$$\text{precision} = \frac{|\{\text{relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{retrieved documents}\}|}$$

Note that the meaning and usage of "precision" in the field of Information Retrieval differs from the definition of accuracy and precision within other branches of science and statistics.

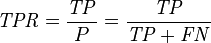

Recall[edit]

Main article: Precision and recall

Recall is the fraction of the documents that are relevant to the query that are successfully retrieved.

$$\text{recall} = \frac{|\{\text{relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{relevant documents}\}|}$$

It is trivial to achieve recall of 100% by returning all documents in response to any query. Therefore, recall alone is not enough; one also needs to measure the number of non-relevant documents, for example by computing the precision.
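The set-based definitions of precision and recall translate directly into code. The sketch below is a minimal illustration in Python; the document identifiers are invented, and returning 0.0 for an empty denominator is a convention assumed here rather than part of the definitions.

```python
def precision(relevant: set, retrieved: set) -> float:
    """Fraction of the retrieved documents that are relevant."""
    if not retrieved:
        return 0.0  # assumed convention when nothing was retrieved
    return len(relevant & retrieved) / len(retrieved)


def recall(relevant: set, retrieved: set) -> float:
    """Fraction of the relevant documents that were retrieved."""
    if not relevant:
        return 0.0  # assumed convention when no document is relevant
    return len(relevant & retrieved) / len(relevant)


# Hypothetical ground truth and system output for one query.
relevant_docs = {"d1", "d2", "d3", "d4"}
retrieved_docs = {"d1", "d2", "d5"}

p = precision(relevant_docs, retrieved_docs)  # 2 of 3 retrieved are relevant
r = recall(relevant_docs, retrieved_docs)     # 2 of 4 relevant were retrieved

# Returning the whole collection gives perfect recall but poor precision.
collection = {"d1", "d2", "d3", "d4", "d5", "d6", "d7", "d8"}
r_all = recall(relevant_docs, collection)
p_all = precision(relevant_docs, collection)
```

The last two lines reproduce the point made in the text: retrieving everything yields a recall of 1.0 while precision drops to 0.5 in this toy collection.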

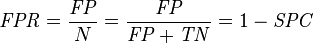

Fall-out[edit]

The proportion of non-relevant documents that are retrieved, out of all non-relevant documents available:

$$\text{fall-out} = \frac{|\{\text{non-relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{non-relevant documents}\}|}$$

It can be looked at as the probability that a non-relevant document is retrieved by the query.

It is trivial to achieve fall-out of 0% by returning zero documents in response to any query.
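Fall-out needs the whole collection, because the non-relevant documents are defined as everything that is not relevant. A minimal Python sketch under that assumption follows; the function name `fall_out` and the sample identifiers are hypothetical.

```python
def fall_out(relevant: set, retrieved: set, collection: set) -> float:
    """Fraction of the non-relevant documents in the collection that were retrieved."""
    non_relevant = collection - relevant
    if not non_relevant:
        return 0.0  # assumed convention when every document is relevant
    return len(non_relevant & retrieved) / len(non_relevant)


# Hypothetical collection of eight documents, four of them relevant.
collection = {"d1", "d2", "d3", "d4", "d5", "d6", "d7", "d8"}
relevant_docs = {"d1", "d2", "d3", "d4"}
retrieved_docs = {"d1", "d2", "d5"}

f = fall_out(relevant_docs, retrieved_docs, collection)   # 1 of 4 non-relevant retrieved
f_empty = fall_out(relevant_docs, set(), collection)      # returning nothing gives 0.0
```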

F-score / F-measure[edit]

Main article: F-score

The weighted harmonic mean of precision and recall, the traditional F-measure or balanced F-score is:

$$F = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$

This is also known as the $F_1$ measure, because recall and precision are evenly weighted.

The general formula for non-negative real $\beta$ is:

$$F_\beta = \frac{(1 + \beta^2) \cdot \text{precision} \cdot \text{recall}}{\beta^2 \cdot \text{precision} + \text{recall}}$$

Two other commonly used F measures are the $F_2$ measure, which weights recall twice as much as precision, and the $F_{0.5}$ measure, which weights precision twice as much as recall.

The F-measure was derived by van Rijsbergen (1979) so that $F_\beta$ "measures the effectiveness of retrieval with respect to a user who attaches $\beta$ times as much importance to recall as precision". It is based on van Rijsbergen's effectiveness measure, written here with $P$ for precision and $R$ for recall:

$$E = 1 - \frac{1}{\frac{\alpha}{P} + \frac{1 - \alpha}{R}}$$

Their relationship is:

$$F_\beta = 1 - E \quad \text{where} \quad \alpha = \frac{1}{1 + \beta^2}$$
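The general F-measure can be sketched in a few lines of Python. The function name `f_beta` and the choice to return 0.0 when the denominator vanishes are assumptions made for this illustration.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall; beta > 1 favours recall."""
    beta_sq = beta * beta
    denominator = beta_sq * precision + recall
    if denominator == 0:
        return 0.0  # assumed convention when the score is otherwise undefined
    return (1 + beta_sq) * precision * recall / denominator


# Same precision/recall pair scored under three different weightings.
p, r = 0.5, 1.0
f1 = f_beta(p, r)             # balanced: 2 * 0.5 * 1.0 / 1.5
f2 = f_beta(p, r, beta=2.0)   # recall-heavy: rewards the perfect recall
f05 = f_beta(p, r, beta=0.5)  # precision-heavy: penalises the low precision
```

With precision 0.5 and recall 1.0 the three scores are ordered `f05 < f1 < f2`, which shows how the choice of beta shifts the emphasis between the two underlying measures.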

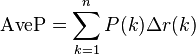

Average precision[edit]

Precision and recall are single-value metrics based on the whole list of documents returned by the system. For systems that return a ranked sequence of documents, it is desirable to also consider the order in which the returned documents are presented. By computing a precision and recall at every position in the ranked sequence of documents, one can plot a precision-recall curve, plotting precision $p(r)$ as a function of recall $r$. Average precision computes the average value of $p(r)$ over the interval from $r = 0$ to $r = 1$:[9]

$$\operatorname{AveP} = \int_0^1 p(r)\,dr$$

That is the area under the precision-recall curve. In practice this integral is replaced with a finite sum over every position in the ranked sequence of documents:

$$\operatorname{AveP} = \sum_{k=1}^{n} P(k)\,\Delta r(k)$$

where $k$ is the rank in the sequence of retrieved documents, $n$ is the number of retrieved documents, $P(k)$ is the precision at cut-off $k$ in the list, and $\Delta r(k)$ is the change in recall from items $k-1$ to $k$.[9]

This finite sum is equivalent to:

$$\operatorname{AveP} = \frac{\sum_{k=1}^{n} P(k) \times \operatorname{rel}(k)}{\text{number of relevant documents}}$$

where $\operatorname{rel}(k)$ is an indicator function equaling 1 if the item at rank $k$ is a relevant document, zero otherwise.[10] Note that the average is over all relevant documents and the relevant documents not retrieved get a precision score of zero.

Some authors choose to interpolate the $p(r)$ function to reduce the impact of "wiggles" in the curve.[11][12] For example, the PASCAL Visual Object Classes challenge (a benchmark for computer vision object detection) computes average precision by averaging the precision over a set of evenly spaced recall levels {0, 0.1, 0.2, ... 1.0}:[11][12]

$$\operatorname{AveP} = \frac{1}{11} \sum_{r \in \{0, 0.1, \ldots, 1.0\}} p_{\text{interp}}(r)$$

where $p_{\text{interp}}(r)$ is an interpolated precision that takes the maximum precision over all recalls greater than or equal to $r$:

$$p_{\text{interp}}(r) = \max_{\tilde{r} : \tilde{r} \geq r} p(\tilde{r})$$

An alternative is to derive an analytical $p(r)$ function by assuming a particular parametric distribution for the underlying decision values. For example, a binormal precision-recall curve can be obtained by assuming decision values in both classes to follow a Gaussian distribution.[13]
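The non-interpolated finite-sum form of average precision (precision at each rank holding a relevant document, summed and divided by the number of relevant documents) can be sketched as follows. The sketch assumes the ranked list contains no duplicate documents; the function names and sample data are hypothetical. A small helper for the mean over several queries (mean average precision) is included for convenience.

```python
def average_precision(ranked: list, relevant: set) -> float:
    """Sum of precision at each rank holding a relevant document, over all relevant documents."""
    if not relevant:
        return 0.0  # assumed convention when no document is relevant
    hits = 0
    total = 0.0
    for k, doc_id in enumerate(ranked, start=1):
        if doc_id in relevant:          # rel(k) == 1
            hits += 1
            total += hits / k           # P(k), precision at cut-off k
    # Relevant documents that were never retrieved contribute zero.
    return total / len(relevant)


def mean_average_precision(runs: list) -> float:
    """Mean of average precision over (ranked, relevant) pairs, one pair per query."""
    if not runs:
        return 0.0
    return sum(average_precision(ranked, relevant) for ranked, relevant in runs) / len(runs)


# Hypothetical ranked output for one query; d4 is relevant but never retrieved.
ranked_docs = ["d1", "d5", "d2", "d6", "d3"]
relevant_docs = {"d1", "d2", "d3", "d4"}

ap = average_precision(ranked_docs, relevant_docs)  # (1/1 + 2/3 + 3/5) / 4
```

Because the missing document `d4` still counts in the denominator, the score cannot reach 1.0 here, which is the behaviour described in the note about relevant documents that are not retrieved.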

Precision at K[edit]

For modern (Web-scale) information retrieval, recall is no longer a meaningful metric, as many queries have thousands of relevant documents, and few users will be interested in reading all of them. Precision at k documents (P@k) is still a useful metric (e.g., P@10 or "Precision at 10" corresponds to the number of relevant results on the first search results page), but it fails to take into account the positions of the relevant documents among the top k.[citation needed] Another shortcoming is that on a query with fewer relevant results than k, even a perfect system will have a score less than 1.[14] On the other hand, P@k is easier to score manually, since only the top k results need to be examined to determine whether they are relevant.

R-Precision

R-precision requires knowing all documents that are relevant to a query. The number of relevant documents, $R$, is used as the cutoff for calculation, and this varies from query to query. For example, if there are 15 documents relevant to "red" in a corpus ($R = 15$), R-precision for "red" looks at the top 15 documents returned, counts the number that are relevant, $r$, and turns that into a relevancy fraction: $r/R = r/15$.[15]

Precision is equal to recall at the R-th position.[14]

Empirically, this measure is often highly correlated to mean average precision.[14]
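The two cut-off measures described above, P@k and R-precision, can be sketched in a few lines of Python. The function names and the 0/1 relevance-list input are assumptions for illustration only; results missing from a short ranking are treated as non-relevant.

```python
from typing import Sequence


def precision_at_k(rels: Sequence[int], k: int) -> float:
    """P@k: number of relevant items among the top k, divided by k."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(rels[:k]) / k


def r_precision(rels: Sequence[int], num_relevant: int) -> float:
    """R-precision: precision at cut-off R, where R is the number of relevant documents."""
    if num_relevant <= 0:
        raise ValueError("num_relevant must be positive")
    return sum(rels[:num_relevant]) / num_relevant


if __name__ == "__main__":
    # A query with only two relevant documents, both ranked first.
    ranking = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
    print(precision_at_k(ranking, 10))  # 0.2
    print(r_precision(ranking, 2))      # 1.0
```

The usage example shows the shortcoming noted for P@k: the ranking is perfect, yet P@10 is only 0.2 because the query has fewer than ten relevant documents, while R-precision reaches 1.0.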

Mean average precision

Mean average precision for a set of queries is the mean of the average precision scores for each query.

Discounted cumulative gain

Main article: Discounted cumulative gain

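DCG uses a graded relevance scale of documents from the result set to evaluate the usefulness, or gain, of a document based on its position in the result list. The premise of DCG is that highly relevant documents appearing lower in a search result list should be penalized, as the graded relevance value is reduced logarithmically in proportion to the position of the result.

The DCG accumulated at a particular rank position $p$ is defined as:

$$\mathrm{DCG}_p = rel_1 + \sum_{i=2}^{p} \frac{rel_i}{\log_2 i}$$

where $rel_i$ is the graded relevance of the result at position $i$. Because result sets may vary in size among queries or systems, performance is compared using a normalized version of DCG: the documents of a result list are sorted by relevance to produce an ideal DCG at position $p$ ($\mathrm{IDCG}_p$), which normalizes the score:

$$\mathrm{nDCG}_p = \frac{\mathrm{DCG}_p}{\mathrm{IDCG}_p}$$

For a perfect ranking, $\mathrm{DCG}_p$ will be the same as the $\mathrm{IDCG}_p$, producing an nDCG of 1.0. All nDCG calculations are then relative values on the interval 0.0 to 1.0 and so are cross-query comparable.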
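The following Python sketch illustrates DCG and its normalized form. It assumes one common variant of the discount, in which the first result is undiscounted and the result at rank i (for i of 2 or more) is divided by log2(i); other variants exist. It also assumes the ideal ordering is built only from the grades present in the result list. The function names are hypothetical.

```python
import math
from typing import Sequence


def dcg(gains: Sequence[float], p: int) -> float:
    """DCG at rank p, assuming the form rel_1 + sum over i = 2..p of rel_i / log2(i)."""
    top = gains[:p]
    if not top:
        return 0.0
    return top[0] + sum(g / math.log2(i) for i, g in enumerate(top[1:], start=2))


def ndcg(gains: Sequence[float], p: int) -> float:
    """nDCG at rank p: DCG divided by the DCG of the same grades sorted best-first."""
    ideal = dcg(sorted(gains, reverse=True), p)
    return dcg(gains, p) / ideal if ideal > 0 else 0.0


if __name__ == "__main__":
    grades = [3, 2, 3, 0, 1, 2]  # graded relevance of the results, in rank order
    print(round(dcg(grades, 6), 3))   # 8.097
    print(round(ndcg(grades, 6), 3))  # 0.932
```

A ranking that is already sorted by grade yields an nDCG of exactly 1.0, which is what makes scores comparable across queries.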

Other measures

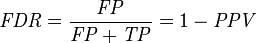

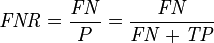

Informedness = Sensitivity + Specificity - 1

Markedness = Precision + NPV - 1

Sources: Fawcett (2006) and Powers (2011).[16][17]

- Mean reciprocal rank

- Spearman's rank correlation coefficient

- bpref - a summation-based measure of how many relevant documents are ranked before irrelevant documents[15]

- GMAP - geometric mean of (per-topic) average precision[15]

- Measures based on marginal relevance and document diversity - see Relevance (information retrieval) § Problems and alternatives
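The informedness and markedness identities given in this section can be computed directly from confusion-matrix counts. The sketch below assumes the standard definitions of sensitivity, specificity, precision and negative predictive value (NPV); the function names and argument order are hypothetical.

```python
def informedness(tp: int, fp: int, fn: int, tn: int) -> float:
    """Informedness = sensitivity + specificity - 1."""
    sensitivity = tp / (tp + fn)  # share of relevant items that were retrieved
    specificity = tn / (tn + fp)  # share of non-relevant items that were rejected
    return sensitivity + specificity - 1


def markedness(tp: int, fp: int, fn: int, tn: int) -> float:
    """Markedness = precision + NPV - 1."""
    precision = tp / (tp + fp)  # share of retrieved items that are relevant
    npv = tn / (tn + fn)        # share of rejected items that are non-relevant
    return precision + npv - 1


if __name__ == "__main__":
    counts = dict(tp=40, fp=10, fn=20, tn=30)
    print(round(informedness(**counts), 4))  # 0.4167
    print(round(markedness(**counts), 4))    # 0.4
```

Both functions divide by a row or column total of the confusion matrix, so they raise ZeroDivisionError if that total is zero; a production version would need to decide how to handle that case.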

Visualization

Visualizations of information retrieval performance include:

- Graphs which chart precision on one axis and recall on the other[15]

- Histograms of average precision over various topics[15]

- Receiver operating characteristic (ROC curve)

- Confusion matrix

Timeline

- Before the 1900s

- 1801: Joseph Marie Jacquard invents the Jacquard loom, the first machine to use punched cards to control a sequence of operations.

- 1880s: Herman Hollerith invents an electro-mechanical data tabulator using punch cards as a machine readable medium.

- 1890: Hollerith cards, keypunches and tabulators were used to process the 1890 US Census data.

- 1920s-1930s

- Emanuel Goldberg submitted patents for his "Statistical Machine", a document search engine that used photoelectric cells and pattern recognition to search the metadata on rolls of microfilmed documents.

- 1940s–1950s

- late 1940s: The US military confronted problems of indexing and retrieving wartime scientific research documents captured from the Germans.

- 1945: Vannevar Bush's "As We May Think" appeared in The Atlantic Monthly.

- 1947: Hans Peter Luhn (research engineer at IBM since 1941) began work on a mechanized punch card-based system for searching chemical compounds.

- 1950s: Growing concern in the US over a "science gap" with the USSR motivated and helped fund mechanized literature searching systems (Allen Kent et al.) and the invention of citation indexing (Eugene Garfield).

- 1950: The term "information retrieval" appears to have been coined by Calvin Mooers.[18]

- 1951: Philip Bagley conducted the earliest experiment in computerized document retrieval in a master's thesis at MIT.[19]

- 1955: Allen Kent joined Case Western Reserve University, and eventually became associate director of the Center for Documentation and Communications Research. That same year, Kent and colleagues published a paper in American Documentation describing the precision and recall measures as well as detailing a proposed "framework" for evaluating an IR system which included statistical sampling methods for determining the number of relevant documents not retrieved.[20]

- 1958: The International Conference on Scientific Information in Washington, DC, included consideration of IR systems as a solution to the problems identified. See: Proceedings of the International Conference on Scientific Information, 1958 (National Academy of Sciences, Washington, DC, 1959).

- 1959: Hans Peter Luhn published "Auto-encoding of documents for information retrieval."

- 1960s

- early 1960s: Gerard Salton began work on IR at Harvard, later moved to Cornell.

- 1960: Melvin Earl Maron and John Lary Kuhns[21] published "On relevance, probabilistic indexing, and information retrieval" in the Journal of the ACM 7(3):216–244, July 1960.

- 1962:

- Cyril W. Cleverdon published early findings of the Cranfield studies, developing a model for IR system evaluation. See: Cyril W. Cleverdon, "Report on the Testing and Analysis of an Investigation into the Comparative Efficiency of Indexing Systems". Cranfield Collection of Aeronautics, Cranfield, England, 1962.

- Kent published Information Analysis and Retrieval.

- 1963:

- The Weinberg report, "Science, Government and Information", gave a full articulation of the idea of a "crisis of scientific information." The report was named after Dr. Alvin Weinberg.

- Joseph Becker and Robert M. Hayes published a text on information retrieval: Becker, Joseph; Hayes, Robert Mayo. Information storage and retrieval: tools, elements, theories. New York, Wiley (1963).

- 1964:

- Karen Spärck Jones finished her thesis at Cambridge, Synonymy and Semantic Classification, and continued work on computational linguistics as it applies to IR.

- The National Bureau of Standards sponsored a symposium titled "Statistical Association Methods for Mechanized Documentation." It produced several highly significant papers, including what is believed to be G. Salton's first published reference to the SMART system.

- mid-1960s:

- The National Library of Medicine developed MEDLARS (Medical Literature Analysis and Retrieval System), the first major machine-readable database and batch-retrieval system.

- Project Intrex at MIT.

- 1965: J. C. R. Licklider published Libraries of the Future.

- 1966: Don Swanson was involved in studies at University of Chicago on Requirements for Future Catalogs.

- late 1960s: F. Wilfrid Lancaster completed evaluation studies of the MEDLARS system and published the first edition of his text on information retrieval.

- 1968:

- Gerard Salton published Automatic Information Organization and Retrieval.

- John W. Sammon, Jr.'s RADC Tech report "Some Mathematics of Information Storage and Retrieval..." outlined the vector model.

- 1969: Sammon's "A nonlinear mapping for data structure analysis" (IEEE Transactions on Computers) was the first proposal for a visualization interface to an IR system.

- 1970s

- early 1970s:

- First online systems—NLM's AIM-TWX, MEDLINE; Lockheed's Dialog; SDC's ORBIT.

- Theodor Nelson promoted the concept of hypertext and published Computer Lib/Dream Machines.

- 1971: Nicholas Jardine and Cornelis J. van Rijsbergen published "The use of hierarchic clustering in information retrieval", which articulated the "cluster hypothesis."[22]

- 1975: Three highly influential publications by Salton fully articulated his vector processing framework and term discrimination model.

- 1978: The First ACM SIGIR conference.

- 1979: C. J. van Rijsbergen published Information Retrieval (Butterworths). Heavy emphasis on probabilistic models.

- 1979: Tamas Doszkocs implemented the CITE natural language user interface for MEDLINE at the National Library of Medicine. The CITE system supported free form query input, ranked output and relevance feedback.[23]

- 1980s

- 1980: First international ACM SIGIR conference, joint with British Computer Society IR group in Cambridge.

- 1982: Nicholas J. Belkin, Robert N. Oddy, and Helen M. Brooks proposed the ASK (Anomalous State of Knowledge) viewpoint for information retrieval. This was an important concept, though their automated analysis tool proved ultimately disappointing.

- 1983: Salton (and Michael J. McGill) published Introduction to Modern Information Retrieval (McGraw-Hill), with heavy emphasis on vector models.

- 1985: David Blair and Bill Maron published "An Evaluation of Retrieval Effectiveness for a Full-Text Document-Retrieval System".

- mid-1980s: Efforts to develop end-user versions of commercial IR systems.

- 1985–1993: Key papers on and experimental systems for visualization interfaces.

- Work by Donald B. Crouch, Robert R. Korfhage, Matthew Chalmers, Anselm Spoerri and others.

- 1989: First World Wide Web proposals by Tim Berners-Lee at CERN.

- 1990s

- 1992: First TREC conference.

- 1997: Publication of Korfhage's Information Storage and Retrieval[24] with emphasis on visualization and multi-reference point systems.

- late 1990s: Web search engines implemented many features formerly found only in experimental IR systems. Search engines became the most common and perhaps the best instantiation of IR models.


References

- Jansen, B. J. and Rieh, S. (2010). The Seventeen Theoretical Constructs of Information Searching and Information Retrieval. Journal of the American Society for Information Sciences and Technology. 61(8), 1517-1534.

- Goodrum, Abby A. (2000). "Image Information Retrieval: An Overview of Current Research". Informing Science 3 (2).

- Foote, Jonathan (1999). "An overview of audio information retrieval". Multimedia Systems (Springer).

- Beel, Jöran; Gipp, Bela; Stiller, Jan-Olaf (2009). "Proceedings of the 5th International Conference on Collaborative Computing: Networking, Applications and Worksharing (CollaborateCom'09)". Washington, DC: IEEE.

- Frakes, William B. (1992). Information Retrieval Data Structures & Algorithms. Prentice-Hall, Inc. ISBN 0-13-463837-9.

- Singhal, Amit (2001). "Modern Information Retrieval: A Brief Overview" (PDF). Bulletin of the IEEE Computer Society Technical Committee on Data Engineering 24 (4): 35–43.

- Mark Sanderson & W. Bruce Croft (2012). "The History of Information Retrieval Research". Proceedings of the IEEE 100: 1444–1451. doi:10.1109/jproc.2012.2189916.

- JE Holmstrom (1948). "Section III. Opening Plenary Session". The Royal Society Scientific Information Conference, 21 June-2 July 1948: report and papers submitted: 85.

- Zhu, Mu (2004). "Recall, Precision and Average Precision" (PDF).

- Turpin, Andrew; Scholer, Falk (2006). "User performance versus precision measures for simple search tasks". Proceedings of the 29th Annual international ACM SIGIR Conference on Research and Development in information Retrieval (Seattle, WA, August 06–11, 2006) (New York, NY: ACM): 11–18. doi:10.1145/1148170.1148176. ISBN 1-59593-369-7.

- Everingham, Mark; Van Gool, Luc; Williams, Christopher K. I.; Winn, John; Zisserman, Andrew (June 2010). "The PASCAL Visual Object Classes (VOC) Challenge" (PDF). International Journal of Computer Vision (Springer) 88 (2): 303–338. doi:10.1007/s11263-009-0275-4. Retrieved 2011-08-29.

- Manning, Christopher D.; Raghavan, Prabhakar; Schütze, Hinrich (2008). Introduction to Information Retrieval. Cambridge University Press.

- K.H. Brodersen, C.S. Ong, K.E. Stephan, J.M. Buhmann (2010). The binormal assumption on precision-recall curves. Proceedings of the 20th International Conference on Pattern Recognition, 4263-4266.

- Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze (2009). "Chapter 8: Evaluation in information retrieval" (PDF). Retrieved 2015-06-14. Part of Introduction to Information Retrieval.

- http://trec.nist.gov/pubs/trec15/appendices/CE.MEASURES06.pdf